Managing The SSD Life Cycle In A Data Center

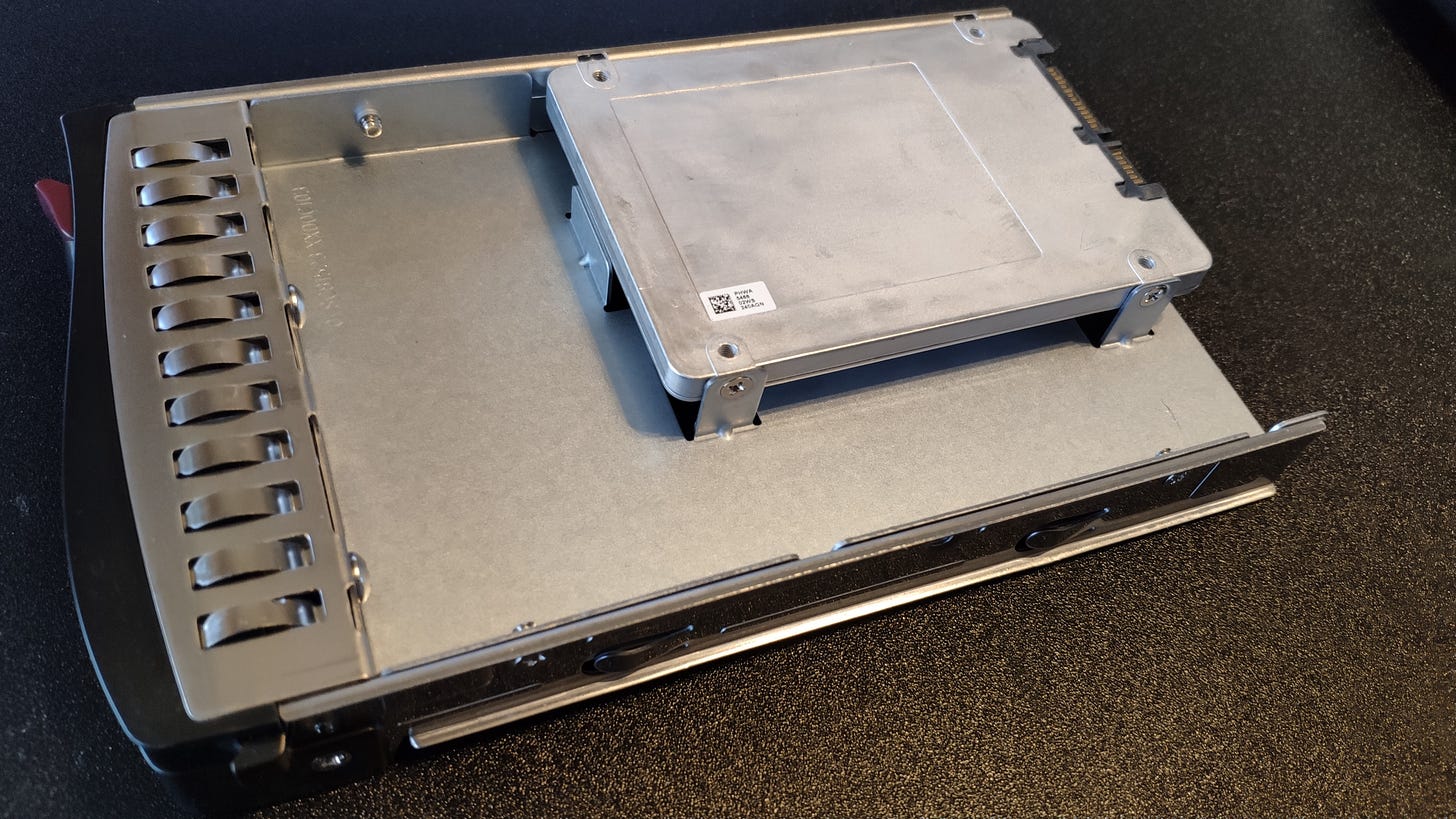

Image courtesy of the author.

If you manage or buy server hardware, you will be buying Solid State Drives (SSDs) these days. Spinning platters are becoming a thing of the past; they are good enough for personal computers, but they struggle to keep up in a demanding data center environment. But before you spend $50,000 on SSDs, you need to know what to expect. Or, in other words, how long should your drives last?

Site Reliability Engineers need to answer these types of questions. Many people inside an organization may need the answer, from hardware teams that know every nuance of SSDs all the way to the finance department, which just needs to know how you came up with this year’s dollar amount for drive replacement.

The lifespan of an SSD can’t be measured solely in time, because the drive’s workload plays a big part. A drive used only for booting a server will last a very long time because very little data is written to it. On the other hand, a drive used as a CDN cache will have a much shorter lifespan because it is constantly being written to. It is for this reason that SSD endurance and lifespan are expressed in how much data can be written to the drive.

SSDs are so much faster than spinning-platter drives because they use technology more similar to RAM than to moving disks. When a hard drive is asked to retrieve data, it has to do a lot of mechanical movement to find it. An SSD doesn’t move at all; it just reads the data from its flash memory chips, which is a much faster process.

To determine how much use you will get out of an SSD drive you need to know three basic things:

How big is the drive?

How long is the warranty?

What is the manufacturer’s endurance rating?

Manufacturer’s endurance rating terminology

The manufacturer’s endurance rating will be expressed in one of three ways:

Drive Writes Per Day (DWPD)

This is a rating of how many times the drive’s entire capacity can be written over, every single day of the warranty period, while the manufacturer still guarantees its performance. If you write less than this many full drives’ worth of data each day, the drive should last for its stated warranty period.

Drive endurance is generally no longer expressed using DWPD, but I find it helpful to reduce the TBW/PBW numbers back to DWPD numbers (more on those two methods below).

Terabytes Written (TBW)

This is a rating of how many terabytes of data can be written to the drive over its warranty period while it still performs as expected.

Note that TBW can be expressed in terabytes or petabytes and is usually annotated as TBW(TB) or TBW(PB) to indicate which form is being used.

Petabytes Written (PBW)

This is the same as TBW, but expressed in Petabytes to accommodate today’s larger drives.

TBW and PBW are more useful metrics because they boil endurance down to a single number: how much data, period. DWPD leaves open questions because it expresses writes per day, so it only means something once you also know the drive’s capacity and warranty period.
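To reduce a TBW or PBW rating back to DWPD, divide the total amount of data the drive is rated for by its capacity multiplied by the number of days in the warranty period. Here is a minimal sketch of that conversion, assuming decimal units (1 PB = 1000 TB, 1 TB = 1000 GB) and 365-day years. The helper name `endurance_to_dwpd` is hypothetical, and vendors may round their published figures differently:

```shell
#!/usr/bin/env bash
# Convert a rated endurance figure (TBW in TB or PB) into DWPD and GB/day.
# Assumes decimal units (1 PB = 1000 TB, 1 TB = 1000 GB) and 365-day years.
# endurance_to_dwpd is a hypothetical helper name.
# Usage: endurance_to_dwpd <endurance> <TB|PB> <capacity_GB> <warranty_years>
endurance_to_dwpd() {
  awk -v e="$1" -v unit="$2" -v cap_gb="$3" -v years="$4" 'BEGIN {
    tb = (unit == "PB") ? e * 1000 : e       # normalize the rating to terabytes
    gb_per_day = tb * 1000 / (years * 365)   # average GB/day over the warranty
    dwpd = gb_per_day / cap_gb               # full-drive writes per day
    printf "%.2f DWPD, %.0f GB/day\n", dwpd, gb_per_day
  }'
}

endurance_to_dwpd 8760 TB 960 5   # 960GB drive rated 8760 TBW over 5 years
```

For a 960GB drive rated at 8760 TBW with a 5-year warranty, this prints `5.00 DWPD, 4800 GB/day`. The GB/day figure is the same number the wintelguy calculator reports, expressed as a daily write budget.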

Warranty period

I think we all understand the concept of a warranty period, but it’s worthwhile quickly touching on it in this context.

We should never plan for our hardware to remain in service past its warranty date. — Every SRE Ever

Warranty is the time frame during which the manufacturer expects the product to function acceptably. In our personal lives, I think we all like stuff that outlives its warranty, but we don’t want to rely on that in the data center. We should never plan for our hardware to continue past the warranty date, because equipment becomes unpredictable at that point. Relying on an out-of-warranty drive could cause unexpected outages or, even worse, damage some other part of the server. Always forecast your drive replacements based on the warranty period.

Drive capacity

Now that we understand the amount of data written and the warranty period, it becomes more obvious how drive capacity factors into the lifespan calculation.

Consider two drives with the same warranty period (5 years) and the same endurance rating (8760 TBW), but different capacities. A 960GB drive will have a DWPD rating of 5. A 480GB drive will have a DWPD rating of 10.

Does that mean the 480GB drive has more endurance?

Technically, the drives have the same endurance. Although the 960GB drive has half the DWPD rating, each of its drive writes covers twice as much space as on the 480GB drive, and half the DWPD over twice the space works out to the same 8760 TB. So the answer lies in how you will use the drive. If it will be pressed into service in a high-write situation where the workload rewrites the drive’s full capacity many times a day, such as a cache that constantly churns its entire contents, then the smaller drive’s higher DWPD rating makes it the better choice. The downside is, of course, that it’s a smaller drive.
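One way to see that the two drives really have the same endurance is to ignore capacity entirely and ask how long a given daily write volume takes to use up the rated TBW. This is a rough sketch that assumes a steady daily write rate and assumes that writes, not reads, are what consume endurance. The helper name `years_to_tbw` is hypothetical:

```shell
#!/usr/bin/env bash
# Years until a rated TBW is used up at a steady daily write volume.
# Assumes a constant write rate and that writes (not reads) consume
# endurance. Note that drive capacity does not appear anywhere.
# years_to_tbw is a hypothetical helper name.
# Usage: years_to_tbw <rated_TBW> <GB_written_per_day>
years_to_tbw() {
  awk -v tbw="$1" -v gb_day="$2" 'BEGIN {
    printf "%.1f\n", (tbw * 1000) / (gb_day * 365)
  }'
}

years_to_tbw 8760 $((960 * 5))    # 960GB drive written 5 times a day
years_to_tbw 8760 $((480 * 10))   # 480GB drive written 10 times a day
years_to_tbw 8760 1000            # a lighter 1000 GB/day workload
```

The first two calls both print 5.0. Writing a 960GB drive 5 times a day and writing a 480GB drive 10 times a day both come to 4800 GB/day. The lighter workload prints 24.0, far beyond any warranty period.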

Let’s play with the warranty period a bit. The standard warranty in the industry is 5 years, but what if it were a 7-year warranty? The 960GB drive then drops to about 3.6 DWPD and the 480GB drive drops to about 7.1 DWPD. That makes sense because we’re now spreading the same 8760 TBW over a longer period, so less can be written per day.
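The DWPD figures for both warranty periods can be recomputed with the same formula. This is a small sketch that assumes both drives are rated at 8760 TBW and uses decimal units and 365-day years. The helper name `dwpd_table` is hypothetical:

```shell
#!/usr/bin/env bash
# Recompute DWPD for both example drives under 5- and 7-year warranties.
# Assumes both are rated 8760 TBW, decimal units (1 TB = 1000 GB) and
# 365-day years. dwpd_table is a hypothetical helper name.
dwpd_table() {
  local tbw=8760 years cap_gb
  for years in 5 7; do
    for cap_gb in 960 480; do
      # DWPD = rated GB / (capacity GB * days in the warranty period)
      awk -v tbw="$tbw" -v cap="$cap_gb" -v y="$years" 'BEGIN {
        printf "%d years, %dGB: %.1f DWPD\n", y, cap, tbw * 1000 / (cap * y * 365)
      }'
    done
  done
}

dwpd_table
```

For the 5-year case, it prints 5.0 and 10.0 DWPD. For the 7-year case, it prints 3.6 and 7.1 DWPD. The unrounded 7-year values are about 3.57 and 7.14.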

Lest you think I am a mathematical savant, I will provide you with this link which makes these conversions easier:

https://wintelguy.com/dwpd-tbw-gbday-calc.pl

Calculate how long it will last

Now that we understand all the pieces, let’s get down to figuring out how long your drive will last. The best predictor of the future is the past, so hopefully you have some drives in service now that you can look at.

Auditing current drives

The two things you will want to understand about your current drives are:

What shape are they in now, and

When did they go into service?

What shape are the drives in now?

smartmontools can help here, and every Linux distribution I’ve come across has it installed or available in its repositories. There is a lot of criticism about how SMART data expresses drive wear. Some of it is valid, but in my experience it works “good enough”. Nothing can tell you with 100% certainty how long a drive will last; there are too many variables, ranging from heat in the chassis to manufacturing tolerances. The best we can do is collect data and try to make supportable forecasts from it.

SSD wear is shown by the Media_Wearout_Indicator attribute in the smartctl output (the exact attribute name varies by drive vendor). I’ve had to truncate the output a bit because Medium’s formatting doesn’t show all the columns nicely.

```
smartctl -A /dev/sdc | grep -E 'ATTRIBUTE_NAME|Media_Wearout_Indicator'
ID# ATTRIBUTE_NAME          VALUE WORST TYPE     UPDATED
    Media_Wearout_Indicator 095   095   Old_age  Always
```

From this we can see that the /dev/sdc drive is in good shape. Its normalized wear value is 95 out of 100, where 100 is a new drive, so roughly 5% of its rated endurance has been used. That’s good info, but we can’t really evaluate it unless we also know how old the drive is.
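If you want to collect this value across many machines, it helps to pull just the number out of the smartctl table. This is a minimal sketch that assumes the standard ATA attribute table printed by `smartctl -A`, whose columns are ID#, ATTRIBUTE_NAME, FLAG, VALUE, and so on, so VALUE is the fourth column. The helper name `smart_value` is hypothetical, and you must pass whatever attribute name your drive vendor uses. NVMe drives report wear differently, as a "Percentage Used" field, so this only applies to ATA-style attribute tables:

```shell
#!/usr/bin/env bash
# Print the normalized VALUE of one SMART attribute from `smartctl -A` output.
# Assumes the ATA attribute table layout:
#   ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
# so VALUE is the 4th whitespace-separated field. smart_value is hypothetical.
# Usage: smartctl -A /dev/sdc | smart_value Media_Wearout_Indicator
smart_value() {
  # "+ 0" turns zero-padded values such as 095 into plain numbers (95);
  # exit status is non-zero if the attribute never appears
  awk -v attr="$1" '$2 == attr { print $4 + 0; found = 1 } END { exit !found }'
}
```

For the drive above, `smartctl -A /dev/sdc | smart_value Media_Wearout_Indicator` would print 95. The non-zero exit status makes it easy for a collection script to notice drives that use a different attribute name.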

How old is the drive?

Hopefully, you have some historical paperwork to tell you when your drives went into use. But, if not, you can make some educated guesses. I have several drives with no history, so I looked at the earliest file on them. These are Linux machines and I know that when we format the drives for use, a folder named lost+found is created. Because there’s no obvious reason for anyone to touch that folder, it is reasonable to assume that the date of that folder reflects the date the drive went into service.

You can build a myriad of scripts to determine the oldest file on a disk. Here’s one that checks the /, /boot, /var/log, and /var/cache partitions. I redirect its output to a file, which ends up in CSV format because the output is comma-separated. It’s not elegant, but it need not be. Feel free to modify it for your own use:

```
# For each mount point, print the hostname, a label, and the year of the
# oldest entry in that directory (ls -1t sorts newest first, so tail -n1
# picks the oldest; date -r prints that entry's modification year).
echo "$(hostname),smart_boot,$(date -r "/boot/$(ls -1t /boot | tail -n1)" +%Y)"
echo "$(hostname),smart_cache,$(date -r "/var/cache/$(ls -1t /var/cache | tail -n1)" +%Y)"
echo "$(hostname),smart_log,$(date -r "/var/log/$(ls -1t /var/log | tail -n1)" +%Y)"
echo "$(hostname),smart_slash,$(date -r "/$(ls -1t / | tail -n1)" +%Y)"
```

It gives output like this:

```
server1,smart_boot,2016
server1,smart_cache,2014
server1,smart_log,2014
server1,smart_slash,2015
```

Assuming each of these partitions is on a different drive, we can see that some drives are already out of, or just about to fall out of, the typical 5-year warranty window.
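To turn that CSV into a replacement list, you can compare each year against the warranty window. This sketch assumes a 5-year warranty by default and treats the year in the third column as the in-service year. Because the script only records years, the ages are approximate. The name `warranty_status` and its status labels are hypothetical:

```shell
#!/usr/bin/env bash
# Append the drive age and a warranty status to "host,label,year" CSV lines.
# Assumes column 3 is the in-service year and a 5-year warranty by default.
# Ages are whole calendar years, so treat them as approximate.
# warranty_status and its status labels are hypothetical.
# Usage: warranty_status [current_year] [warranty_years] < drives.csv
warranty_status() {
  awk -F, -v now="${1:-$(date +%Y)}" -v years="${2:-5}" '{
    age = now - $3
    if (age >= years)          status = "out_of_warranty"
    else if (age == years - 1) status = "expiring_soon"
    else                       status = "in_warranty"
    print $0 "," age "," status
  }'
}
```

Here is a worked example using 2020 as the current year (an example value) on the output above: `warranty_status 2020 < drives.csv` would mark the 2014 and 2015 drives as out_of_warranty and the 2016 drive as expiring_soon.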

Put it all together

Now we have a good idea of our drive life. Using good old /dev/sdc — which is mounted on /var/log — I can see that even though the drive has lots of wear left, it is outside of the warranty period. Therefore, I am at risk of failures regardless of the drive wear left, so it should go on my replacement list.

With this data we can see that the drive model I’m using on /dev/sdc is obviously overkill: a well-matched drive would not still have 95% of its life left after 6 years. This is an opportunity to review the drive specs and perhaps save some money by replacing it with a lower-endurance drive. What I am looking for is a drive about 4 years old with about 20% of its life left. That is the sweet spot of efficiency: a drive that will likely last the warranty period, but not much longer, so I am spending the “right” amount of money on it. Even if I don’t find a drive like that in my inventory, I can at least look at the specs and wear of the drives I do have to set upper and lower limits that help me source a more suitable drive.
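The sweet-spot reasoning can be made more concrete by projecting each drive’s wear forward. This rough sketch assumes that wear accumulates linearly and that the drive is worn out when the normalized value reaches 0; real wear is rarely that tidy. The name `project_life` and the cutoff that treats a life of more than twice the warranty as overkill are hypothetical choices, not an industry rule:

```shell
#!/usr/bin/env bash
# Project a drive's total life from its normalized wear value and age.
# Assumes linear wear and that the drive is worn out at value 0.
# project_life and the "2x warranty = overkill" cutoff are hypothetical.
# Usage: project_life <normalized_value> <age_years> <warranty_years>
project_life() {
  awk -v value="$1" -v age="$2" -v warranty="$3" 'BEGIN {
    used = 100 - value                  # percentage points consumed so far
    if (used <= 0 || age <= 0) { print "no measurable wear yet"; exit }
    rate = used / age                   # points consumed per year
    total = age + value / rate          # projected years until value hits 0
    if (total > 2 * warranty)  verdict = "likely overkill"
    else if (total < warranty) verdict = "may not last the warranty"
    else                       verdict = "about right"
    printf "projected life %.1f years (%s)\n", total, verdict
  }'
}

project_life 95 6 5   # /dev/sdc: value 95 after 6 years, 5-year warranty
project_life 20 4 5   # the sweet-spot drive: value 20 after 4 years
```

The first call prints `projected life 120.0 years (likely overkill)`. The second prints `projected life 5.0 years (about right)`, which matches a drive that lasts its warranty period but not much longer.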

These tools and concepts should give you enough basis to determine the drive replacement cadence you need to establish. I use variations of these scripts and tools to build CSV files that I can share with almost everyone in the company, regardless of their technical expertise. I can show the hardware teams how I determined that the drives we’re currently buying are (or are not) sufficient for our needs. I can also show the less technical procurement people how I determined that I need $X budget for drive replacement this year.

my shorter content on the fediverse: https://cosocial.ca/@jonw