Internet Folklore: The Cult of ATDT

I always get a bit nostalgic this time of year. I think it is a combination of my impending birthday and the fact that we’re socked deep into the Canadian winter in February and my work from home lifestyle has made me utterly stir crazy by now. Whatever the reason, today I’m reflecting on how I got where I am in my career, and acknowledging that almost all of it has to do with a passion for technology rather than any kind of career plan I had for myself. I never had, and still don’t have, any idea what I want to do in a few years. I’m just one of those lucky people who do what they love and the career followed. These days, $DAYJOB dictates what I learn next, but that wasn’t the case in the early years. In those days, everything was wide open and new and exciting. This post reflects on those early pre-internet years.

198…3?

I can’t remember the circumstances surrounding this, nor the actual year, but in the early 80s, my parents bought a Commodore Vic 20 computer. It was ostensibly for “the family” but nobody had any interest in it but me, and it quickly moved from a central point in the house where everyone could access it to my bedroom. As one of the first computers aimed at regular people instead of businesses, it did not come with a monitor. It was designed to hook up to an existing TV set via an RF toggle just like the gaming consoles of the era did. Some time prior to buying the Vic 20, my parents had bought us all our own small black and white TVs for our rooms so that is what I used for the Vic 20 monitor. I can’t remember if the computer had color or not, but I never saw it in any case.

Initially, my main use of the Vic 20 was gaming. And of those, the game I remember the most was Adventureland. It was a text-based adventure game where you have to perform certain actions in a certain sequence to get to the end. I played it for hours and I did reach the end a few times, but I can’t remember the details now. I do remember a bear, chiggers, and a gas bladder, perhaps not in that order.

Somewhere along the way, I discovered the BASIC programming language. A friend of mine down the road had a Commodore 64 and a disk drive. It was much faster to save and load code with the disk drive than with the Datasette (tape recorder) my Vic 20 came with (image below). Plus he had a color TV and…gasp…a 300 baud acoustical modem. I moved out of the area after a few years and have never seen this buddy in real life since, but those few years were packed with programming and BBS’ing and set the stage for a life-long love of technology.

Around the same time, my junior high school (grades 7-9) went on a major computer bender. It borrowed a Volkswagen-sized Hewlett Packard card reader from somewhere and my math class was put on hiatus for a month while we learned what to do with it. I still remember my math teacher, Mr. Wareham, asking us to instruct him how to stand up from a sitting position, step by step, as a learning tool to understand the type of discrete directions we’d have to provide to a computer in order to make it do anything. We then started penciling in the cards, shoving them into the computer, and marveling over the little ticker tape response that printed out showing the results of our work.

Shortly after that, two or three Commodore PET personal computers showed up in a slightly large-ish closet that became “the computer room” at school. It had a sign-up sheet to control the rush of students who wanted to use them. But, much like the Commodore Vic 20 in my house, these Commodores also weren’t all that popular so the reality was that I could use them whenever I wanted.

Around that time I got my hands on a BASIC programming book. Although I had already been programming rudimentary games in BASIC, I had no “formal” training. That book introduced me to PEEKs and POKEs which elevated my programming to a whole other level. I’d frequently run out of memory for my games on my Vic 20, but my buddy with the Commodore 64 was always ready to hack away, and we built some reasonably impressive games for a couple of kids. We even sent one to Thorn EMI but they rejected it. In retrospect, it was nice to get a response at all.

My family then moved across the country and the Vic 20 disappeared somewhere, and my interest in computers went with it for a few years.

199…0?

By this time I had failed to graduate high school, had spent many years running with the “wrong crowd” but somehow found my footing again and was the sous chef for a successful mid-range casual dining chain. Somewhere around this same time, my interest in computing was rekindled and I bought a used laptop, or what we called a “luggable” in those days. It had a 20MB hard drive and a monochrome blue VGA monitor. It came with Windows 3.0 installed and my mother-in-law lent me her Windows 3.1 disks so I could get all modern. I remember installing DOS 6.0 and then installing Windows over top of that. But the important thing is that it had a 1200 baud internal modem. That was a reasonably fast modem in those days, especially for a portable computer that generally did not come with internal modems at all, so I was very pleased with it. I rekindled my love for the BBS scene with that brick.

DOS 6.0 was significant in that it had disk compression built-in. Disk compression was extremely important in this era because portable storage technology was young and disk drives were very small. Compressing data was necessary to make any system usable. The technology in DOS 6.0 was named DoubleSpace but it came to light later that it was built on technology from Stacker, a product of a company named Stac, which successfully sued Microsoft for that. However, none of those legal wranglings changed what was on the DOS 6.0 disks so life went on.

Around this time I realized that there wasn’t a lot of career opportunity in cheffing, so while I enjoyed the work and evening shifts, it grew old after several years and I left it to become the assistant manager of a fast-food chain. My luggable VGA beastie wasn’t faring very well by now. The built-in monitor had completely failed and I was using it as a desktop with an external monitor plugged into it. The time had come to buy my first new computer.

In an outlet of the only computer store in town, a young Future Shop, I found a lot of computers, but none of them were recognizable. The days of the “Commodore” and the “Amiga” were gone and the shelves were lined with identical-looking beige boxes. I had no clue what I was looking for so I just bought what I thought would work. A 486DX/30 with a 2400 baud internal modem. My defunct luggable was a 386 something, so this was a step up all around. I briefly flirted with the idea of buying a 14.4Kbps modem, but it was prohibitively expensive so I settled for the 2400. It was around this time that I learned the difference between “baud” and “bits per second (bps)” but that is too boring for even me to go into now.

I took this thing home in many boxes and set it all up. Windows 3.1 came pre-installed so I did not have to mess around with DOS or Windows, and the hard drive was big enough that I don’t recall having any space issues. But what I did have was a Super VGA (SVGA) color monitor which was blowing my mind. It was with this computer that I discovered multi-line BBSes, online MUDs, real-time chat, internet shell accounts and email and newsgroups using tools like PINE and ELM. Internet shell accounts were available on some BBSes. More expensive graphical SLIP and PPP accounts were available sparingly, but beyond my means at the time.

Several years later I went on to college for a Computer Information Systems program, did a stint in the Navy, and my career properly started. But these BBS and early internet years were the era that laid the foundation for a love of technology. A lot of that technology is still in use today, albeit hidden behind the shiny exterior of the modern internet.

The Single-line BBS Years

There were hundreds of BBSes in my local area code in these years. Partially because the world was a smaller place and there were fewer area codes, but also because it took some technical chops to connect to a BBS and understand what to do with it. Therefore, a lot of BBS users were also BBS SysOps who ran their own BBS system in addition to being a user on others. It was a fairly technical crowd.

The vast majority of BBSes were one-line hobby boards and most of us had long lists of BBSes we liked because we knew that the chances of connecting to any given one were slight, so we’d move on to the next one in the list when we got a busy signal. Almost everyone used Windows in those days. There were a few people with Apple computers, but the Linux kernel didn’t even exist yet so there were zero *nix people outside of RMS’ Free Software group, a group nobody outside of academia had heard of at all. The class of software used to connect to BBS systems was serial terminal programs, but we generally just called them terminal programs. The pre-eminent terminal program of the day was Procomm Plus and it had all the features we ever wanted – speed dialing, dialing lists, and modem volume control.

Windows came with a very basic terminal program called HyperTerminal and the standard way of setting up a new computer was to use the built-in HyperTerminal program just long enough to log into a BBS and download a better terminal program. Much like people today use Internet Explorer on a new computer just long enough to download Firefox and then never use IE again.

The single-line BBSes were basically “drop by” stops. They had short session limits, usually 20 minutes, which was enough to check if anyone had left you mail and download some software. Although, in the slow 1200 baud and less days, it was not always possible to download an entire file in that time. The universe answered our prayers with a download protocol named ZModem. ZModem had a lot of benefits that we were oblivious to, save one. It supported resumable downloads. If you were terminated from a BBS for whatever reason, when you were able to log back in and download the file again, it would resume where it left off.
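For the curious, the resume idea can be sketched in a few lines of Python. This is only an illustration of the concept, not the ZModem wire protocol: the function names, the in-memory "file", and the `max_chunks` cutoff that stands in for a dropped session are all made up for the example. The one real idea is that the receiver reports how many bytes it already has and the sender starts from that offset instead of from zero.

```python
def send_from(source: bytes, offset: int, chunk_size: int = 128):
    """Sender side: yield chunks of `source`, starting at `offset`."""
    for start in range(offset, len(source), chunk_size):
        yield source[start:start + chunk_size]


def receive(source: bytes, partial: bytearray, max_chunks=None,
            chunk_size: int = 128) -> bytearray:
    """Receiver side: append to `partial`, picking up where it left off.

    `max_chunks` is a hypothetical knob that simulates the session
    being cut off after that many chunks.
    """
    offset = len(partial)  # "I already have this many bytes"
    for count, chunk in enumerate(send_from(source, offset, chunk_size)):
        if max_chunks is not None and count >= max_chunks:
            break  # session limit hit or the line dropped
        partial.extend(chunk)
    return partial


# A 1024-byte pretend file, downloaded across two sessions.
the_file = bytes(range(256)) * 4
partial = bytearray()
receive(the_file, partial, max_chunks=3)   # first call gets cut off
receive(the_file, partial)                 # second call resumes
```

After the first call the partial file holds three chunks. The second call only transfers what is missing, which is exactly what made a 20 minute session limit survivable.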

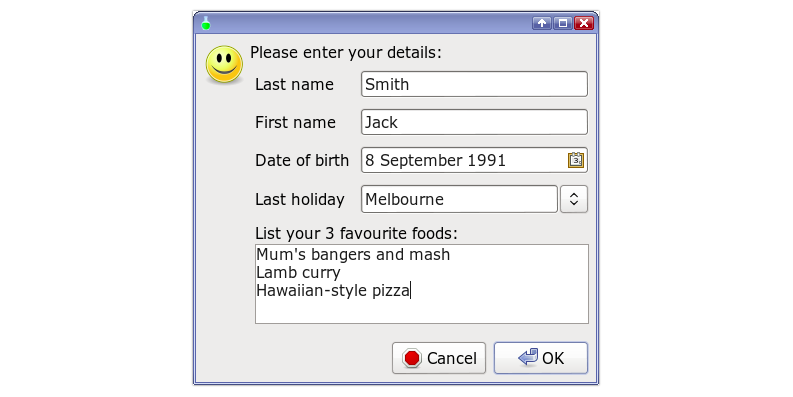

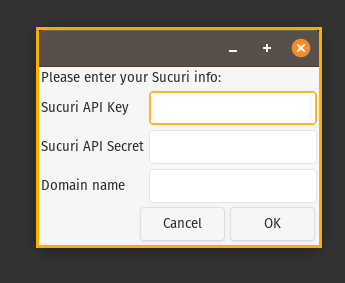

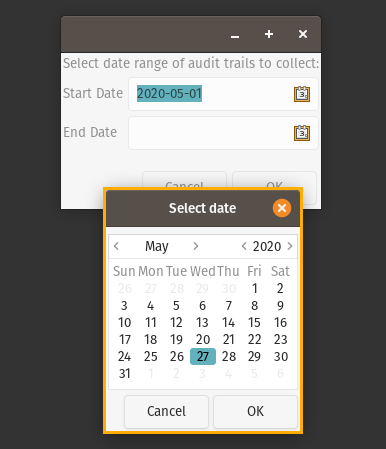

Most BBSes in those days participated in FidoNet, or other FidoNet Technology Networks (FTNs). These were messaging networks. A BBS owner could choose to install mailer and mail tossing software on their BBS which would allow it to exchange both public messages in forums, called “echoes”, and direct and private (ish) mail, called “netmail”. A BBS SysOp who wanted to join one of these networks had to apply to that area’s coordinator through some already connected BBS, provide basic information such as the node name and the node phone number, and swear to observe Zone Mail Hour (ZMH). ZMH was the hour every night when FTN networks would call each other and collect/drop off messages. ZMH was sacred in those days – your BBS had to be available during that hour otherwise you would not get your messages that day and your users would be annoyed. Today, the internet is used to transfer messages, so ZMH is no longer needed nor enforced.

The Multi-line BBS years

Eventually, BBS software improved so that it could support multiple lines. A few enterprising people stood up multi-line BBSes. Early multi-line BBSes had a separate phone number for each node so you had to have multiple entries for those BBSes in your dialer. Only a very few, usually commercial, boards had the technology and money to have a single number round-robin to an open node.

Initially, multi-line wasn’t such a big deal because all the multiple lines really did was increase your odds of being able to connect to the BBS. But soon developers realized that if you have multiple people online at the same time, why not allow them to interact? It was during those years that Multi-User Dungeons (MUDs) and real-time chatting came into being and changed the BBS scene forever, pushing it towards what the internet would eventually become.

My board of choice was a BBS named Nucleus in Canada. Nuke still exists as an ISP now, having shut down its BBS long ago. Nuke ran a very expensive and amazing piece of BBS software named MajorBBS by Galacticomm. It had capabilities no other BBS had, and even though there were other multi-line BBSes in the area, Nucleus became the gold standard and was able to collect subscriptions from us, a feat that few other BBSes had managed to accomplish. Nucleus used to co-locate with a book and table-top gaming store, The Sentry Box, but it has come a long way now.

Once we were able to interact with other users in real-time, the world opened up.

MUDs

MajorBBS had a ton of games built specifically for the platform, and they out-performed most other games of the era. Keep in mind that these games were text-based and mostly variations of MUDs, although some had rudimentary ASCII graphics. My favorite of them all was a game called Mutants. It was during Mutants play that I learned about what modern-day gamers complain about: lag. But in a dial-up scenario, the lag is not due to internet congestion. There is no internet involved and you have a 1:1 direct connection to the BBS. The lag came from modem speed. Mutants made no attempt to homogenize user speeds so if you had a nice speedy 14.4 modem then you could literally run circles around someone with a 1200 baud modem in the forest. It had the potential to be ghastly unfair. While I distinctly remember users running by me so fast that the game only told me I heard them go by, I don’t have any memories of this being an actual problem during gameplay. I am not sure how that could be, but memories are fickle things.
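To put rough numbers on that speed gap, here is a back-of-the-envelope calculation in Python. It assumes 10 bits on the wire per character (8 data bits plus a start and a stop bit) and ignores compression and error correction, and it uses a full 80x24 screen of text purely as a convenient unit. None of that comes from Mutants itself.

```python
BITS_PER_CHAR = 10  # assumption: 8 data bits + 1 start bit + 1 stop bit


def seconds_to_send(num_chars: int, bits_per_second: int) -> float:
    """Time to push `num_chars` characters through a modem link."""
    return num_chars * BITS_PER_CHAR / bits_per_second


screen = 80 * 24  # one full text screen, 1920 characters
for speed in (1200, 2400, 14400):
    print(f"{speed:>6} bps: {seconds_to_send(screen, speed):.1f} s per screen")
```

Under those assumptions a full screen takes 16 seconds at 1200 bps and a little over 1 second at 14.4, so the faster player could see and react to a dozen screens in the time the slower one was still waiting on the first.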

Chat

I recall only three multi-line boards in my area code in those years: Nucleus, Octopode, and Chatline. I think Chatline was also a paid BBS but it was less gamey and more chatty, as the name suggests, which wasn’t really of interest to me so I had an account but did not use it much. I remember Octopode as being one of those multi-line BBSes with 8 different phone numbers – hence the Octo part of the name. I guess that made sense because it was a free BBS so the SysOps were footing the bill for those lines themselves somehow, and adding features like a single phone number would push that bill even higher. Being a free multi-line BBS, Octopode was overrun with users even younger than myself at the time, and it was hard to find a free line. I did not spend much time on Octopode for those reasons.

MajorBBS chat had a lot of features that were familiar from IRC and also allowed some customization, such as custom entry messages. That was a fun feature – you’d be chatting away and “Suddenly, the fog clears and in walks Doglier, dragging his dog bowls behind him…” would happen.

A great deal of fun in chat came from sabotaging newbies that did not have a good understanding of how their modem worked. I am sure this is arcane knowledge again by now, so I will recap a bit.

Recall that modems negotiate a serial connection over the phone line and once that connection is established, everything you type is just pushed through the pipe to the other end. But you still need to maintain control of the modem to tell it to do things like hang up when you’re done. To allow users to send commands to the modem and also to the connected system, there needs to be some kind of signal so the modem knows to take some text as commands. That is the Hayes Command Set, also known as the AT command set.

Beginning a string with AT tells the modem to pay ATtention to what comes next because it is a modem command, not something to be sent through the pipe to the other end. The most common AT command is ATDTxxxxxx where the x’s are the BBS phone number. ATDT means “ATtention, Dial using Touch-tones” which tells the modem to use tone dialing to dial the number. There is also an ATDP for Dial using Pulse, but I have never used that. Other useful commands are ATH0 which causes the modem to hang up, ATL0 which turns the modem volume Level down to 0, its quietest setting, and sending a plain +++ with no AT in front which causes the modem to go into command mode entirely and stop sending data through the pipe.
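As a small illustration, here is a Python sketch that decodes just the handful of commands mentioned above. It is a toy: the `describe` function and its wording are invented for this post, the phone number is a placeholder, and a real modem understands far more commands (and strings of them) than this does.

```python
def describe(line: str) -> str:
    """Describe a few Hayes-style commands in plain English (toy decoder)."""
    cmd = line.strip().upper()
    if cmd == "+++":
        return "escape: switch to command mode"
    if not cmd.startswith("AT"):
        return "no AT prefix: not a modem command"
    body = cmd[2:]  # everything after the ATtention prefix
    if body.startswith("DT"):
        return f"dial {body[2:]} using touch-tones"
    if body.startswith("DP"):
        return f"dial {body[2:]} using pulses"
    if body == "H0":
        return "hang up"
    if body.startswith("L") and body[1:].isdigit():
        return f"set speaker volume level to {body[1:]}"
    return "AT command not covered by this sketch"


for example in ("ATDT5551234", "ath0", "ATL0", "+++", "hello everyone"):
    print(f"{example!r:>18} -> {describe(example)}")
```

The point is how little separates chat text from a modem command: the same keystrokes mean something different depending on whether the modem is listening for AT.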

It probably becomes pretty easy to see the sport in tricking new users into typing things like ATH0 and punting themselves from the board. Another common trick was to macro-bomb a user off the board: if you sent private messages faster than the receiver’s modem could handle, most modems would simply hang up. MajorBBS eventually introduced rate-limiting for private messages to prevent this, but for a long time it was a good working tactic to get rid of people who annoyed you.
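I have no idea how MajorBBS actually implemented its rate limit, so the following Python is purely a guess at the general shape of such a thing: a sliding window that lets each sender through only a few times per interval. The class name, the limits, and the user name are all invented for the example.

```python
from collections import deque


class MessageRateLimiter:
    """Allow at most `max_messages` per sender in any `per_seconds` window."""

    def __init__(self, max_messages: int, per_seconds: float):
        self.max_messages = max_messages
        self.per_seconds = per_seconds
        self.recent = {}  # sender name -> deque of recent send times

    def allow(self, sender: str, now: float) -> bool:
        stamps = self.recent.setdefault(sender, deque())
        # Forget sends that have aged out of the window.
        while stamps and now - stamps[0] >= self.per_seconds:
            stamps.popleft()
        if len(stamps) >= self.max_messages:
            return False  # too chatty: drop this private message
        stamps.append(now)
        return True


# Hypothetical limit: 3 private messages per 10 seconds.
limiter = MessageRateLimiter(max_messages=3, per_seconds=10.0)
burst = [limiter.allow("bomber", t) for t in (0.0, 1.0, 2.0, 3.0)]
later = limiter.allow("bomber", 10.5)
```

In this run the first three messages get through, the fourth in the burst is refused, and the sender is allowed again once the oldest message has aged out of the window. A macro firing dozens of messages a second would mostly bounce off.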

Moving on

The next few years were magical all over again because graphical SLIP and PPP connections became affordable. Suddenly, we weren’t staring into a black terminal screen anymore. We were using things like web browsers and email clients. That was another wild time to live through, but I will leave that era for a different post.

I remember the modem and BBS era very fondly. I have a lifelong friend from those days that I met online and we’ve remained friends ever since, despite never having worked together or attended school together. My first contact with technology was that Vic 20 where I learned programming, and my friend’s Commodore 64 with a modem is where I learned that there was a whole world outside of my bedroom window that I knew nothing about, full of possibilities. That 300 baud acoustical modem kick-started an entire lifetime and career in technology.

Header image credit: By Lorax at English Wikipedia - Own work, Public Domain,

my shorter content on the fediverse: https://cosocial.ca/@jonw